Since summer of 1998 I have been involved in the design and assembly of distributed parallel computers based on commodity hardware and free software components. Such systems, commonly known as "Beowulf" systems, can provide supercomputer power at a fraction of supercomputer prices. To date I have designed, built, and managed three such computers. Some details are given below.


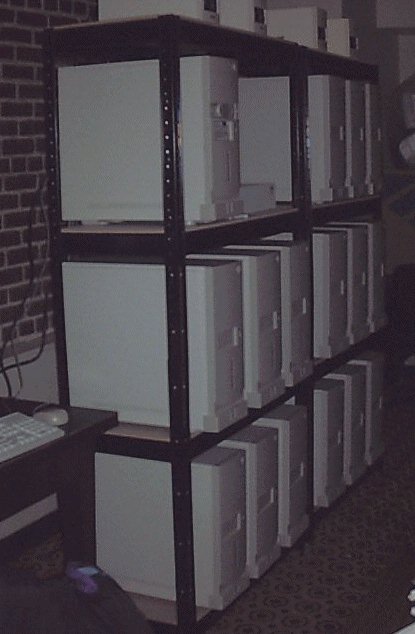

Grendel

MAIDO Lab

Dept. of Mech. and Aero. Eng.

University of Texas at Arlington

I designed and built this machine in late summer of 1998. The total cost at that time was around $35,000. This machine is composed of 27 nodes. The first 16 each have an ASUS P2B-D motherboard with two Pentium II 400 MHz CPUs, a 3.5 GB IDE hard drive, and 512 MB of SDRAM.

The machine was expanded in summer of 2000 to include an additional 11 nodes, each composed of an ASUS P2B-D motherboard with two Pentium III 500 MHz CPUs, a 10.5 GB IDE hard drive, and 512 MB of SDRAM. That's a total of 54 processors and 13.5 GB of core memory. Each of the first 16 nodes [...]. The last 11 nodes have a 3COM EtherLink 905B 100 Mb/s Fast Ethernet adapter. A Baystack 450 Fast Ethernet switch with a 4-port MDA expansion module is used for network switching.
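The totals quoted for Grendel can be checked with a few lines of arithmetic. The following is only a minimal sketch; the `NodeGroup` class and `totals` function are hypothetical names introduced here for illustration, and the node counts and memory sizes are the ones given in the description above.

```python
from dataclasses import dataclass


@dataclass
class NodeGroup:
    """A batch of identical nodes (hypothetical helper, not part of any cluster software)."""
    count: int            # number of nodes in this batch
    cpus_per_node: int    # processors on each motherboard
    ram_mb_per_node: int  # SDRAM per node, in MB


def totals(groups):
    """Return (nodes, processors, memory in GB) summed over all batches."""
    nodes = sum(g.count for g in groups)
    cpus = sum(g.count * g.cpus_per_node for g in groups)
    ram_gb = sum(g.count * g.ram_mb_per_node for g in groups) / 1024
    return nodes, cpus, ram_gb


# Grendel: 16 original dual Pentium II nodes plus 11 dual Pentium III nodes,
# each with 512 MB of SDRAM.
grendel = [NodeGroup(16, 2, 512), NodeGroup(11, 2, 512)]
print(totals(grendel))  # (27, 54, 13.5)
```

The same helper applies to any of the machines on this page by changing the list of node batches.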


The Linux operating system is used on all machines. We are currently running kernel version 2.2.16 with MPICH 1.2.0. A PC with dual 350 MHz Pentium II processors is used as the NFS server and DQS queue master.

This machine has a peak performance of 10 gigaflops. However, the largest sustained speed achieved was 1.55 gigaflops, using a coarse-grained CFD code running on the 32 400 MHz processors. We are currently using this computing platform to perform large-scale optimization with various parallel optimization codes such as my parallel genetic algorithm (PGA).
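To put the peak and sustained figures side by side, here is a small sketch of the arithmetic. The function names are hypothetical, and the comparison is rough: the text does not say which processors the 10 gigaflops peak figure covers, while the sustained figure was measured on 32 processors only.

```python
def fraction_of_peak(sustained_gflops, peak_gflops):
    """Sustained speed as a fraction of the quoted peak speed."""
    return sustained_gflops / peak_gflops


def per_cpu_mflops(sustained_gflops, n_cpus):
    """Average sustained speed per processor, in megaflops."""
    return sustained_gflops * 1000 / n_cpus


# Figures quoted above: 10 gigaflops peak, 1.55 gigaflops sustained on 32 CPUs.
print(f"{fraction_of_peak(1.55, 10.0):.1%} of quoted peak")  # 15.5% of quoted peak
print(f"{per_cpu_mflops(1.55, 32):.1f} Mflops per CPU")      # 48.4 Mflops per CPU
```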


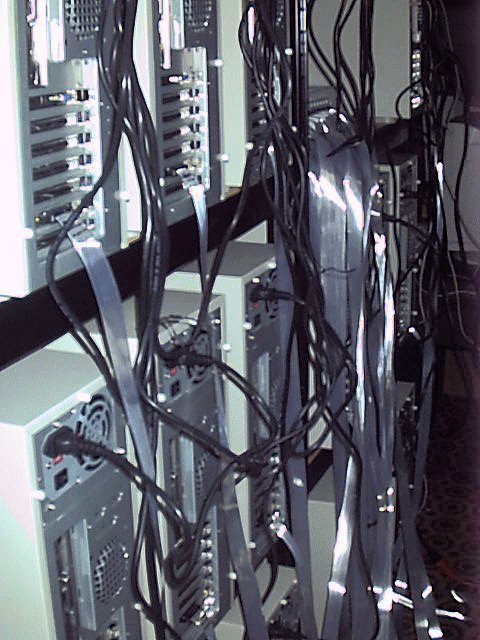

HighPACE

(High Performance Acoustics Computing

Environment)

Graduate Program in Acoustics

Penn State University

I designed this machine in the summer of 1999 and built it in early spring of 2000. The total cost at that time was around $70,000. This machine is composed of 16 nodes, each with an AMI Megarum II motherboard with two Pentium III 450 MHz CPUs and 512 MB of SDRAM, for a total of 32 processors and 8 GB of core memory. Each node also has a 12 GB IDE hard drive and a 64-bit PCI SAN Myrinet network adapter. A Myricom 16-way Myrinet switch is used for network switching.

The Linux operating system is used on all machines. We are currently running kernel version 2.2.13 with GM 1.2 and MPICH-over-GM version 1.2.13.


Benchmarking results show the communications interconnect to be 2-3 times faster than conventional Fast Ethernet. Latencies when using MPI were found to be smaller by a factor of 5-6 compared to Fast Ethernet.

This machine is to be used with parallel acoustics codes that tend to use more fine-grained parallel algorithms. Such codes would perform poorly on a machine using Fast Ethernet for communications, particularly for smaller computational grids. The high-bandwidth, low-latency Myricom network should allow for higher parallel efficiencies for such fine-grained codes, even when relatively small computational grids are used.
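Why latency matters so much for fine-grained codes can be illustrated with the usual first-order model of message cost, time = latency + size / bandwidth. The sketch below is not a benchmark. The 100 Mb/s Fast Ethernet bandwidth and the 2-3x and 5-6x ratios come from the text above, but the 100 microsecond Fast Ethernet latency is an assumed placeholder, and all names are hypothetical.

```python
def transfer_time_us(message_bytes, latency_us, bandwidth_mbit_s):
    """First-order message cost in microseconds: latency + size / bandwidth.

    1 Mbit/s equals 1 bit per microsecond, so bits / (Mbit/s) gives microseconds.
    """
    return latency_us + (message_bytes * 8) / bandwidth_mbit_s


# Fast Ethernet: bandwidth from the text, latency is an ASSUMED placeholder.
FE_BANDWIDTH = 100.0   # Mbit/s
FE_LATENCY = 100.0     # microseconds (assumption, not a measured value)

# Myrinet: mid-points of the ratios quoted above (2-3x bandwidth, 5-6x lower latency).
MYRI_BANDWIDTH = FE_BANDWIDTH * 2.5
MYRI_LATENCY = FE_LATENCY / 5.5


def speedup(message_bytes):
    """Ratio of Fast Ethernet to Myrinet transfer time for one message."""
    fe = transfer_time_us(message_bytes, FE_LATENCY, FE_BANDWIDTH)
    myri = transfer_time_us(message_bytes, MYRI_LATENCY, MYRI_BANDWIDTH)
    return fe / myri


for size in (64, 1024, 65536, 1048576):
    print(f"{size:>8} bytes: {speedup(size):.1f}x faster")
```

Under this model, small messages (typical of fine-grained codes on small grids) gain close to the full latency ratio, while very large messages gain only the bandwidth ratio.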

Here's a picture of me putting one of the HighPACE nodes together. It took almost 5 days to assemble and test the complete machine.


MAIDO Cluster

(Multidisciplinary Analysis Inverse Design and Optimization

Cluster)

Dept. of Mechanical and Materials Engineering

Florida International University

I designed this machine in the summer/fall of 2003 and built it in early January of 2004. The total cost at that time was around $100,000. This machine is composed of 48 nodes, each with a Tyan S2882 motherboard with two AMD Opteron 262 1.6 GHz CPUs and 512 MB of Registered ECC DDR PC-2700 memory for each processor. That's a total of 96 processors and 48 GB of core memory. Each node also has a 40 GB IDE hard drive and two Broadcom Gigabit Ethernet network adapters on the 64-bit PCI-X bus. Dell Gigabit and Fast Ethernet switches are used for network switching. The computing nodes were assembled and tested locally by Alienware Computers in Miami, Florida. I am still in the process of testing and benchmarking this system. More details will be posted later. I made a short presentation describing the design and assembly of this system. Check the MAIDROC web site for more information about this computer.
